By Keith Warriner, Lara Jane Warriner and Mahdiyek Hasani, Department of Food Science, University of Guelph, Guelph, Ontario

Corresponding author: [email protected]

I recall sitting in the office one day in the fall of 2018 when I received a call from a reporter who informed me that there had been another outbreak of E. coli O157:H7 linked to romaine lettuce. After my initial response of “Oh no,” the reporter asked why we continue to have outbreaks linked to lettuce. It was a valid question, and all I could answer was that regulators and industry do the same thing after every outbreak but expect a different outcome.

The typical play made by the Leafy Greens Marketing Agreement (LGMA) and the Food and Drug Administration (FDA) is to coordinate a response in which the former comes out with positive messaging and the latter says it is going to improve food safety standards. In the aftermath of the latest outbreak linked to romaine lettuce contaminated with E. coli O157:H7, the FDA rolled out its New Era of Smarter Food Safety initiative, which essentially furthered the aim of introducing blockchain but also promoted increased testing. The LGMA also came out with new irrigation water standards, given that the eight outbreaks linked to romaine lettuce could be attributed to contaminated water.

Test, test, test

It is instinctive for policy makers and the media to call for increased testing to address food safety issues. Testing is also a good “go-to solution” for industry to appease those who press for it, as it is easier to go with the flow. The reality is that testing is expensive, and a balance needs to be struck between providing assurance to retailers and consumers and burdening an industry that works on razor-thin margins. Maximizing the value of testing is therefore key, which means pausing to reflect on how test programs were developed, why they have been deficient to date and, importantly, how they can be improved.

A brief history of irrigation water testing

Robert Koch and his research team started developing general growth media to cultivate microbes in the 1870s and rolled out the first version in 1881. Over the following years the media were developed further, gelatin and then agar were introduced as solidifying agents, and the petri dish was invented. As is still the case today, the new ability to enumerate and isolate microbes was first applied in the medical field and then trickled down to the food sector.

The first documented microbiological criteria relevant to the food sector were published in 1905 in the first edition of the water standards (Standard Methods of Water Analysis). At that time, the incubation conditions for enumerating the total count were 20° C for 48 h. The incubation temperature of 20° C was based on practical rather than microbiological considerations, given that the gelatin solidifying agent would melt above 28° C. Soon after the water testing standards were introduced, the dairy sector developed microbiological criteria for milk. By this time agar was starting to be used, which enabled the incubation temperature to be increased to 37° C, at which mesophilic bacteria of concern to human health could be enumerated. This posed a problem for the early microbiology test labs, as they now required two incubators: one for water testing and the other for dairy analysis. Eventually, 34° C was selected as a standard incubation temperature so that laboratories could operate a single incubator, and hence the Standard Plate Count was born.

The story of how the Standard Plate Count came to be reflects how the development of standard methods was influenced by practicalities and was often a compromise rather than being based on sound science.

Development of microbiological criteria

Growth media were developed over the years to enable selective enumeration of key microbial groups and, later, of specific pathogens. The next (if not the current) problem is how to interpret such data: what is a good count and what is a bad one? The first microbiological criteria were based on observation, with physicians setting criteria according to the levels of microbes recovered from food and water implicated in outbreaks. Microbiological criteria for foods were developed further, eventually leading to the establishment of the International Commission on Microbiological Specifications for Foods (ICMSF) in the 1960s. Advances in risk assessment and statistics led to acceptance testing, which provided the basis for sampling plans to accompany the criteria. However, by the end of the 1980s it had become evident that testing was limited as a food safety net, which subsequently led to the introduction of the Hazard Analysis and Critical Control Points (HACCP) system.

Establishing the microbial criteria for irrigation water in leafy green production

The standards for irrigation water were developed by the FDA and LGMA following the 2006 E. coli O157:H7 outbreak linked to spinach. The powers-that-be knew they needed to come up with a sampling plan and microbiological criteria. I am sure they looked at the potable water standards but then decided that these could only be achieved if a disinfection treatment akin to that used for drinking water was applied. Given that this was too costly, the LGMA went down a level and selected the recreational water standards, with the reasoning, maybe, that if humans can swim in it then it should be safe to irrigate crops. The FDA was on board with the idea, and the standards were later adopted under the Produce Safety Rule of the Food Safety Modernization Act (FSMA).

However, the LGMA didn’t fully embrace the EPA recreational standards, as this would have meant screening for enterococci in addition to generic E. coli, followed by further indicators whenever a positive sample was detected. Moreover, the EPA did not state a testing frequency, although most states implemented weekly testing of recreational water, increasing to daily when a contamination event could potentially occur (for example, after a flood). The FDA proposed weekly testing of irrigation water, but the LGMA considered one to two samples per season to be sufficient.

A long-standing debate in water testing is how well indicators such as generic E. coli reflect the actual safety status of a water source. It was recognized early in water testing that the gastrointestinal tract harbors coliforms, which could therefore serve as an indicator of fecal contamination and, by extension, of the presence of enteric pathogens. The US Public Health Service took this logic and, in the 1960s, came up with a microbiological criterion based on coliform counts. To arrive at a number, health officials went through historical data and noted that an outbreak linked to contaminated water had occurred when coliform counts were 2,300 CFU/100 ml. The National Technical Advisory Committee took this level and suggested that a standard of 235 MPN/100 ml be adopted, which exists to this day.

Response to Escherichia coli O157:H7 outbreaks linked to romaine lettuce, 2018-2020

The irrigation water standards were built on a shaky foundation, and perhaps it was no surprise that the series of outbreaks linked to romaine lettuce occurred. The LGMA nonetheless needed to come up with plans to tighten the irrigation water standards. The result was a somewhat convoluted solution, although it did have some positive aspects with respect to risk assessment and preventive controls.

The new irrigation water standards recognize two types of water source. Type A is a source with a low risk of contamination by enteric pathogens, for example, well or ground water. In contrast, Type B sources, such as surface water, are susceptible to contamination. Testing of Type A water involves six samples (three at the start and a further three seven days later), with a pass recorded when five of the six samples have generic E. coli <10 MPN/100 ml. A further set of samples is taken 21 days before harvest if the water is to be applied by overhead irrigation. If a sample is out of compliance, a root-cause analysis can be performed and a second set of samples taken. If the second set of tests passes, the failed first tests can be disregarded.
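The Type A pass/fail rule described above can be expressed as a short check. This is a minimal Python sketch, assuming the six results are reported as generic E. coli in MPN/100 ml and that "<10" is a strict inequality; the function names and the retest helper are hypothetical illustrations, not wording taken from the LGMA standard.

```python
from typing import Optional, Sequence

# Limit from the text: generic E. coli < 10 MPN/100 ml per sample.
TYPE_A_LIMIT_MPN = 10.0


def type_a_passes(samples_mpn: Sequence[float],
                  limit: float = TYPE_A_LIMIT_MPN,
                  required_passing: int = 5) -> bool:
    """Return True if at least `required_passing` of six results are below `limit`.

    Assumes exactly six results: three initial samples plus three taken seven days later.
    """
    if len(samples_mpn) != 6:
        raise ValueError("expected six sample results")
    passing = sum(1 for count in samples_mpn if count < limit)
    return passing >= required_passing


def evaluate_with_retest(first_set: Sequence[float],
                         second_set: Optional[Sequence[float]] = None) -> bool:
    """Hypothetical helper: if the first set fails and a root-cause analysis led to
    a second set, the outcome of the second set replaces that of the first."""
    if type_a_passes(first_set):
        return True
    if second_set is None:
        return False
    return type_a_passes(second_set)
```

For example, a first set of `[1, 3, 12, 2, 15, 1]` has only four results below 10 and fails on its own, yet `evaluate_with_retest` returns `True` if the retest set passes, which is how the "second chance" in the rule plays out in practice.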

For Type B water, the water is screened for coliforms (<99 MPN/100 ml) and generic E. coli (<10 MPN/100 ml). The water cannot be used for overhead irrigation unless it is treated with a process that achieves a 2-log reduction of coliforms. Type B water can be used up to 21 days before harvest and must be sampled monthly, with no single sample exceeding 235 MPN/100 ml and an average of no more than 126 MPN/100 ml. If the water source fails to meet the standard, the final crop should be tested for E. coli O157:H7, Salmonella and Listeria monocytogenes.
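The Type B monthly criteria combine a single-sample ceiling with a limit on the average. The sketch below assumes, as in the EPA recreational water criteria the standard was borrowed from, that the average is a geometric mean; it takes that mean over a hypothetical rolling window of the five most recent samples and floors zero counts at 1 MPN/100 ml so they can be log-transformed. Both the window size and the floor are assumptions, not values stated in the text.

```python
import math
from typing import List, Sequence

SINGLE_SAMPLE_MAX = 235.0  # MPN/100 ml, from the text
AVERAGE_MAX = 126.0        # MPN/100 ml, from the text


def geometric_mean(values: Sequence[float]) -> float:
    """Geometric mean, flooring counts at 1 MPN/100 ml (assumption) so log10 is defined."""
    logs = [math.log10(max(v, 1.0)) for v in values]
    return 10.0 ** (sum(logs) / len(logs))


def type_b_compliant(monthly_mpn: List[float], window: int = 5) -> bool:
    """Check monthly generic E. coli results against both Type B limits.

    Any single result above 235 fails the source; the average limit is applied
    to the most recent `window` results (hypothetical rolling window).
    """
    if not monthly_mpn:
        raise ValueError("no samples")
    if any(v > SINGLE_SAMPLE_MAX for v in monthly_mpn):
        return False
    recent = monthly_mpn[-window:]
    return geometric_mean(recent) <= AVERAGE_MAX
```

A geometric mean damps the effect of one high count more than an arithmetic mean would, which is why it is paired with the separate single-sample ceiling.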

Will the new LGMA standards make a difference?

Although the rollout of the new LGMA microbiological standards was received positively by industry, government and the media, inherent weaknesses persist. Putting aside the “Mulligan” in responding to a failed irrigation water test, the question is whether the increase in sampling will make a difference. In short, it will make little difference, as can be illustrated using operating characteristic curves. As an example, the beef industry routinely performs N60 sampling of beef trim when screening for E. coli O157:H7. Assuming a 1% prevalence of the pathogen, taking 60 samples would provide a probability of detection of only about 45%. The far smaller sample numbers under the LGMA standards would therefore have a low probability of detecting contamination.
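The detection probabilities behind this argument follow from a simple binomial calculation: if each sample independently has probability p of being positive, the chance that all n samples are negative is (1 - p)^n, which is the probability of acceptance plotted on an operating characteristic curve for a zero-tolerance plan. The sketch assumes a random distribution of the target and a perfectly sensitive test, both of which flatter real-world performance.

```python
def detection_probability(prevalence: float, n_samples: int) -> float:
    """Probability that at least one of n independent samples is positive.

    1 - P(all negative) = 1 - (1 - p)**n, i.e. one minus the probability of
    acceptance on a zero-tolerance operating characteristic curve.
    """
    if not 0.0 <= prevalence <= 1.0:
        raise ValueError("prevalence must be between 0 and 1")
    return 1.0 - (1.0 - prevalence) ** n_samples


if __name__ == "__main__":
    # Six samples (roughly the Type A set), the beef-trim N60 plan, and 300 samples.
    for n in (6, 60, 300):
        print(f"n={n:>3}: P(detect) = {detection_probability(0.01, n):.1%}")
```

At 1% prevalence this prints 5.9% for 6 samples, 45.3% for 60 and 95.1% for 300.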

Is it time for a rethink on how testing is performed?

Although the series of outbreaks linked to romaine lettuce would suggest an industry in crisis, the reality is that 80% of those events occurred within a small geographical region of California. This implies that the vast majority of irrigation water is safe, with contamination arising from unexpected, sporadic events. Therefore, rather than following a rigid sampling plan, testing should be more investigative, targeting the conditions under which contamination is likely. For example, there is evidence that contamination of ground water can be linked to the level of the water table. Likewise, flooding or high-precipitation events increase the likelihood of contamination of irrigation water.
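One way to make sampling investigative rather than rigid is to let site conditions set the sampling interval. The sketch below is purely illustrative: the trigger variables follow the examples in the text (flooding, heavy rainfall, a falling water table), but every threshold and interval in it is a hypothetical placeholder, not a value from the LGMA, FDA or any other standard.

```python
from dataclasses import dataclass

# All thresholds and intervals below are hypothetical placeholders.
BASE_INTERVAL_DAYS = 30
HEAVY_RAIN_MM_24H = 25.0
WATER_TABLE_DROP_M = 1.0


@dataclass
class SiteConditions:
    rainfall_mm_24h: float
    flooding_reported: bool
    water_table_change_m: float  # negative means the water table has fallen


def next_sampling_interval_days(cond: SiteConditions) -> int:
    """Days until the next sample: the routine interval unless a risk trigger fires."""
    if cond.flooding_reported or cond.rainfall_mm_24h >= HEAVY_RAIN_MM_24H:
        return 1  # sample straight away after a flood or heavy rain event
    if cond.water_table_change_m <= -WATER_TABLE_DROP_M:
        return 7  # tighter monitoring of ground water while the water table falls
    return BASE_INTERVAL_DAYS
```

The design point is that sampling effort is concentrated when contamination is most plausible, rather than spread evenly across a season in which most water is clean.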

Test sediments rather than water

The traditional approach to collecting water samples is to take grab samples of 100 to 500 ml. An alternative is to apply concentration methods such as Moore swabs or tangential flow filtration to sample over a longer period or to increase the volume sampled. This approach was applied by the FDA when collecting evidence during the E. coli O157:H7 romaine lettuce investigation. Yet it was only when the FDA sampled the canal sediments that it finally recovered the implicated strain.
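Why larger volumes help can be seen with a simple Poisson model: if target cells are randomly dispersed at concentration c, a sample of volume V contains on average c·V cells, and the chance that it holds at least one is 1 - exp(-c·V). The sketch assumes random (Poisson) dispersion, which real waters rarely achieve, treats the recovery efficiency of a concentration method as a hypothetical parameter, and uses a made-up pathogen concentration in the example.

```python
import math


def capture_probability(cells_per_litre: float, volume_litres: float,
                        recovery: float = 1.0) -> float:
    """P(at least one target cell is recovered).

    Assumes cells are Poisson-distributed in the water and each is recovered
    independently with probability `recovery`, so the recovered count is
    Poisson with mean cells_per_litre * volume_litres * recovery.
    """
    expected_cells = cells_per_litre * volume_litres * recovery
    return 1.0 - math.exp(-expected_cells)


if __name__ == "__main__":
    conc = 0.1  # hypothetical: one pathogen cell per 10 litres
    for label, vol, rec in [("grab, 0.5 l", 0.5, 1.0),
                            ("concentrated, 100 l, 50% recovery", 100.0, 0.5)]:
        print(f"{label}: {capture_probability(conc, vol, rec):.1%}")
```

With these hypothetical inputs a 0.5 l grab sample has about a 4.9% chance of containing the target, whereas 100 l concentrated at 50% recovery gives about 99.3%.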

Contaminants that enter surface water systems can readily be retained within the environment by adsorbing onto stream sediments. By screening sediments, therefore, the history of a water source can be assessed with regard to exposure to pesticides and heavy metals, in addition to human pathogens. Sediments also shift over time depending on the flow of the river and geographical features. Through imaging and modelling, it is possible to predict sediment movement and identify where contaminants could accumulate, and hence where samples should be taken. The approach therefore holds the promise of a customized way of determining the risk of surface or ground waters. It could be envisaged that next-generation sequencing (NGS) could be used to identify pathogens (viral, bacterial and enteric protozoan) in sediments rather than depending on indicators such as generic E. coli and coliforms.

Bringing light to irrigation water treatment

Any microbial testing program will always be limited in detecting pathogens or indicators. As the prevalence of the target decreases, the number of samples required to give a reasonable probability of detection increases. For example, if we assume a 1% prevalence, then roughly 300 samples of water or sediment would need to be taken to have a 95% probability of detecting the target. Contamination is also encountered sporadically, even in a matrix such as water, which further reduces the chance of catching the target if present. This does not mean that water monitoring is futile, but rather that it should be applied to verify the efficacy of interventions instead of serving as a safety net.
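The sample numbers quoted above come from inverting the detection probability 1 - (1 - p)^n and solving for the smallest n that reaches a chosen confidence. This is a minimal sketch under the same idealized assumptions (independent, randomly distributed positives and a perfect test); the 95% default confidence is an assumed choice, not one stated in the text.

```python
import math


def samples_required(prevalence: float, confidence: float = 0.95) -> int:
    """Smallest n such that 1 - (1 - prevalence)**n >= confidence."""
    if not (0.0 < prevalence < 1.0 and 0.0 < confidence < 1.0):
        raise ValueError("prevalence and confidence must lie strictly between 0 and 1")
    # n >= ln(1 - confidence) / ln(1 - prevalence); both logs are negative.
    return math.ceil(math.log(1.0 - confidence) / math.log(1.0 - prevalence))
```

At 1% prevalence this gives 299 samples for 95% confidence and 459 for 99%; at 5% prevalence, 59 samples suffice for 95%.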

Not all water sources are the same

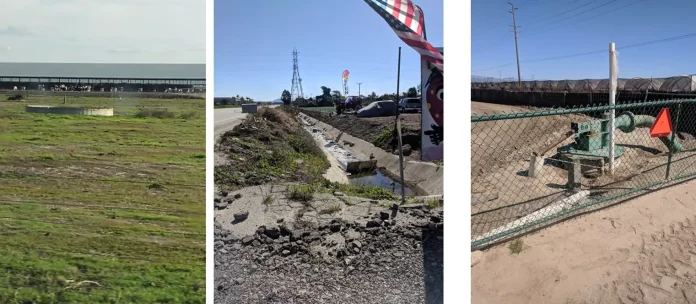

Irrigation water used in leafy green production can be derived from various sources depending on availability and allowances. Surface water is the most commonly used, supplied by a labyrinth of canals winding between fields of leafy greens. This water is open to contamination from concentrated animal feeding operations and environmental sources (for example, wildlife), in addition to urban and industrial sources. Consequently, the quality of surface water can vary significantly, and it has been implicated in several of the more recent E. coli O157:H7 outbreaks linked to lettuce. Ground or well water is an additional source of irrigation water (Figure 1). Here, water is drawn from an aquifer that is contracting due to ongoing droughts. There is a theory that the dwindling aquifer in California is causing sediments that harbor pathogens to become dispersed in the water and then carried onto the growing crops. Therefore, although ground water is generally less turbid than surface water, a risk of contamination by human pathogens still exists.

Spectral analysis of water

The current risk management practice for irrigation water is based on testing that is ultimately limited by the small number and volume of samples tested. With the advent of imaging technologies and big data, the field of hyperspectral analysis has expanded in recent years. The principle of the approach is to gather data over a spectrum of wavelengths and then identify unique signatures through machine learning. The method has increasingly been applied to determine the distribution of nutrients within fields or to assess the time of harvest. With regard to irrigation water, it is feasible to collect spectra of water over time to define a baseline of quality and then identify spectra characteristic of fecal contamination. Hyperspectral analysis also provides a means of continuously monitoring irrigation water and raising an alarm when a contamination event occurs.
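The baseline-then-alarm idea can be illustrated without machine learning. The sketch below swaps in a simpler, established hyperspectral technique, the spectral angle mapper: it averages clean-water spectra into a baseline and flags any new reading whose angle to that baseline exceeds a threshold. This is a stand-in for, not an example of, the machine-learning classifiers mentioned above; the class name and the 0.05 rad threshold are hypothetical, and a real threshold would have to be set from validation data.

```python
import math
from typing import List, Sequence


def spectral_angle(a: Sequence[float], b: Sequence[float]) -> float:
    """Angle (radians) between two spectra; insensitive to overall brightness."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        raise ValueError("spectra must be non-zero")
    cos_theta = max(-1.0, min(1.0, dot / (norm_a * norm_b)))  # guard rounding
    return math.acos(cos_theta)


def mean_spectrum(spectra: Sequence[Sequence[float]]) -> List[float]:
    """Band-by-band mean of several spectra of equal length."""
    n = len(spectra)
    return [sum(band) / n for band in zip(*spectra)]


class SpectralMonitor:
    """Hypothetical monitor: flags readings that deviate in shape from a clean baseline."""

    def __init__(self, baseline_spectra: Sequence[Sequence[float]],
                 threshold_rad: float = 0.05):
        self.baseline = mean_spectrum(baseline_spectra)
        self.threshold = threshold_rad

    def is_anomalous(self, reading: Sequence[float]) -> bool:
        return spectral_angle(self.baseline, reading) > self.threshold
```

Because the angle ignores overall intensity, a reading that is simply brighter or dimmer than the baseline (for example, under different sunlight) is not flagged, while a change in spectral shape is.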

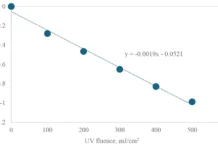

Ultraviolet treatment of irrigation water

UV-C treatment of water was first performed on a large scale in 1910 in France and, within six years, was applied in the US to treat drinking water; the rest, as the saying goes, is history. Given this long history, one would expect UV-C disinfection of irrigation water to present few technical challenges. In reality, however, treatment of irrigation water presents challenges unique to the field environment. For example, farms can be in remote regions with no immediate source of power, which calls for novel energy generation approaches that can operate day and night with low maintenance. The more challenging aspect to overcome, however, is water turbidity, which can vary from clear to murky (>30 NTU). Yet turbidity caused by suspended solids is not the only barrier to UV-C, as some waters carry a high loading of dissolved solids that strongly absorb UV-C. For example, water commonly contains humic and fulvic acids derived from plant decay, and iron ions are also strong UV-C absorbers. Consequently, even when turbidity is relatively low (<20 NTU), the efficacy of UV-C in reducing microbial levels is low despite the application of doses on the order of 20 mJ/cm² that would ordinarily result in a 5-log reduction of bacteria such as E. coli. A further challenge is the photo-repair (photoreactivation) of microbes after UV treatment, in which the damage to DNA is repaired on exposure to light in the range of 300 to 580 nm. Photoreactivation of E. coli and the parasite Giardia in UV-C treated water has been reported, although the degree to which this happens in irrigation water has yet to be assessed.
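The effect of UV-absorbing solutes can be estimated with the "water factor" used in collimated-beam UV dosimetry: for a well-mixed layer of depth l with decadic absorption coefficient a at 254 nm, the average fluence is the incident fluence multiplied by (1 - 10^(-a·l)) / (a·l·ln 10). The sketch below combines this with first-order (log-linear) inactivation. The D10 of 4 mJ/cm² is a hypothetical value chosen so that 20 mJ/cm² gives the 5-log reduction mentioned above in clear water, and the model ignores scattering by suspended solids, reactor hydraulics and photoreactivation.

```python
import math


def absorbance_from_uvt(uvt_percent: float) -> float:
    """Decadic absorbance per cm at 254 nm from UV transmittance (%) over a 1 cm path."""
    if not 0.0 < uvt_percent <= 100.0:
        raise ValueError("UVT must be in (0, 100]")
    return -math.log10(uvt_percent / 100.0)


def water_factor(absorbance_per_cm: float, depth_cm: float) -> float:
    """Average fraction of the incident fluence received across a well-mixed layer."""
    al = absorbance_per_cm * depth_cm
    if al == 0.0:
        return 1.0  # no absorption: every depth receives the incident fluence
    return (1.0 - 10.0 ** (-al)) / (al * math.log(10.0))


def log_reduction(incident_mj_cm2: float, uvt_percent: float, depth_cm: float,
                  d10_mj_cm2: float = 4.0) -> float:
    """Log reduction assuming first-order inactivation with a hypothetical D10."""
    wf = water_factor(absorbance_from_uvt(uvt_percent), depth_cm)
    return incident_mj_cm2 * wf / d10_mj_cm2
```

Under these assumptions an incident 20 mJ/cm² gives 5.0 log in perfectly clear water but only about 1.1 log in water with 10% UVT across a 2 cm layer, which illustrates why dissolved absorbers such as humic acids or iron undermine treatment even at low turbidity.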

Combining UV-C with other technologies

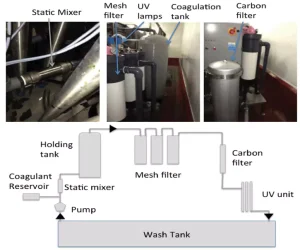

When looking at irrigation water disinfection, there is often a tendency to select a single treatment, for example, chemical disinfection, UV or filtration. Yet each method has advantages and disadvantages, with none being ideal. It therefore makes sense to harness the advantages of each or to introduce an additional step to overcome their limitations. For UV-C treatment, the limitation of turbidity can be overcome by applying mixing/turbulent reactors such as Dean flow or Taylor-Couette reactors, but more easily by microfiltration. A microfiltration process has to strike a balance between removing insoluble solids and avoiding a significant increase in energy requirements or biofilm formation that blocks pores. This can be achieved using a preliminary coagulation step followed by filtration through a series of mesh filters (60 to 300 µm) and then UV-C. When the system was used to treat spent wash water from spinach washing, turbidity was decreased by 10 NTU and the total aerobic count was reduced by >2.00 log CFU to below the limit of enumeration (Figure 2).

A further pretreatment of irrigation water prior to UV-C is a pre-oxidation step that degrades UV-absorbing constituents, thereby increasing antimicrobial efficacy. Pre-oxidation is a process applied in wastewater treatment to reduce the chlorine demand of water. The treatments use a chemical oxidant such as ozone, permanganate or other oxidizing species. Hydrogen peroxide in the presence of a ferric catalyst can also be applied in what is termed an advanced oxidation process (AOP). The approach has the benefit of reducing UV-C absorbing species, thereby enhancing the efficacy of ultraviolet treatment, without leaving the residues associated with disinfection using chlorine or peracetic acid.

Future directions

Irrigation water quality issues will continue, especially in leafy green growing regions that are experiencing drought and where animal production facilities are also present. The current reliance on testing to catch contaminated irrigation water is expensive and limited. Risk management strategies are required that are effective but have no impact on the soil or plants. In this regard, chemical sanitizers have been perceived negatively despite their low cost and ease of application.

UV-C offers an alternative, but it has to go beyond simply installing an ultraviolet reactor. Reactor design needs to account for variability in turbidity and for low-molecular-weight UV-absorbing constituents that reduce treatment efficacy. Although combined treatments for irrigation water disinfection are promising, they need to be balanced against complexity and cost. Nonetheless, UV-C-based technologies offer a promising approach to managing pathogens associated with irrigation water and reducing the food safety risks associated with leafy greens.

Further reading

BANACH, J.L., HOFFMANS, Y., APPELMAN, W.A.J., VAN BOKHORST-VAN DE VEEN, H. & VAN ASSELT, E.D. 2021. Application of water disinfection technologies for agricultural waters. Agricultural Water Management, 244, 106527.

WANG, H., HASANI, M., WU, F. & WARRINER, K. 2022. Pre-oxidation of spent lettuce wash water by continuous advanced oxidation process to reduce chlorine demand and cross-contamination of pathogens during post-harvest washing. Food Microbiology, 3891240.

WARRINER, K. & NAMVAR, A. 2013. Recent advances in fresh produce post-harvest decontamination technologies to enhance microbiological safety. Stewart Postharvest Review 1:1-8.

Projects of interest

Agriculture water treatment – Southwest region. Center for Produce Safety. PI: Channah Rock. https://www.centerforproducesafety.org/researchproject/455/awards/Agriculture_Water_Treatment_Southwest_Region.html

Demonstration of practical, effective and environmentally sustainable agricultural water treatments to achieve compliance with microbiological criteria. Center for Produce Safety. PI: Ana Allende. https://www.centerforproducesafety.org/researchproject/374/awards/Demonstration_of_practical_effective_and_environmentally_sustainable_agricultural_water_treatments_to_achieve_compliance_with_microbiological_criteria.html

Professor Keith Warriner is a faculty member in the Department of Food Science, University of Guelph, and undertakes research in food microbiology with a focus on the food safety of fresh produce. His research is directed toward studying pathogen-plant interactions and developing pre- and post-harvest decontamination methods, including the application of UV. For more information, contact Warriner at [email protected], (519) 824-4120 or @kwarrine on Twitter.